Summary and comprehension of papers: S.-E. Wei, V. Ramakrishna, T. Kanade, and Y. Sheikh. Convolutional pose machines. In CVPR, 2016.

A simple regression based implementation/VGG16 of pose estimation with tensorflow.

Approaches

A sequential architecture composed of convolutional networks that directly operate on belief maps from previous stages, producing increasingly refined estimates for part location.

Architecture and receptive fields of CPMs

![Architecture and receptive fields of CPMs, credit: [Wei et al., 2016] Architecture and receptive fields of CPMs](/images/pose-estimation/architecture-cpm.jpg)

The visualization of each layer and their parameters in browser.

CPMs consist of a sequence of convolutional networks that repeatedly produce 2D belief maps 1 for the location of each part. At each stage in a CPM, image features and the belief maps produced by the previous stage are used as input. The belief maps provide the subsequent stage an expressive non-parametric encoding of the spatial uncertainty of location for each part, allowing the CPM to learn rich image-dependent spatial models of the relationships between parts.

Pose Machine

Sequence of predictors produce increasingly refined estimates for each keypoint location.![Pose Machines, credit: [Varum et al., 2014] Pose Machines](/images/pose-estimation/pose-machines.jpg)

- Training reduces to training multiple supervised classifiers

- No structured loss function. No specialized solvers

- No handcrafted spatial model

- Spatial model is learned implicitly by the classifiers in a data-driven fashion

Convolutional Networks

- Learning Feature Representations: Convolutional Architectures for Feature Embedding

- Learning Context Representations: Large Receptive Fields as a Design Criterion

- larger receptive field captures longrange interactions between parts & incorporates more context information

- a large receptive field on both the image and the belief maps.

- Subsequent stages

- directly operate on belief maps from previous stages, producing increasingly refined estimates for part locations, without the need for explicit graphical model-style inference

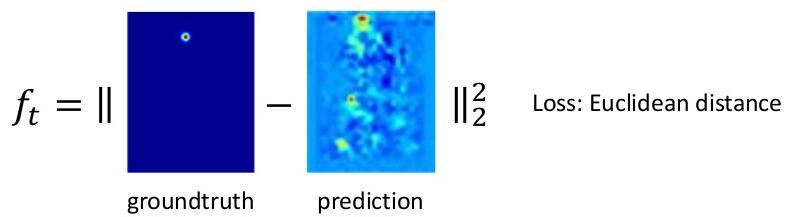

Learning & Training

The overall proposed multistage architecture is fully differentiable and therefore can be trained in an end-to-end fashion using backpropagation.

- Jointing training with periodical intermediate supervision replenishes gradients and guides the network to produce increasingly accurate belief maps

- Network without intermediate supervision leads vanishing gradients.

![Joint training with intermediate supervision, credit: [Wei et al., 2016] Joint training with intermediate supervision](/images/pose-estimation/intermediate-supervision.jpg)

Evaluation Metrics

- Percentage of Detected Joints (PDJ)

- measures the performance using a curve of the percentage of correctly localized joints by varying localization precision threshold, which is normalized by the scale defined as distance between left shoulder and right hip

- invariant to scale

- Percentage of Correct Parts (PCP)

- measures the percentage of correctly localized body parts

- A candidate body part is treated as correct if its segment endpoints lie within 50% of the length of the ground-truth annotated endpoints.

Implementation

The source code of CPM implemented with caffe are provided by the authors. A tensorflow version implementation can be found here. I test some images with the pretrained model of the tensorflow version but the results are terrible. But you can safely refer to the CPM model definition in tensorflow.

Here I just try to train and test some neural convolutional networks with my toy dataset for human pose estimation including:

- Simple several convolutinal layers network

- VGG16

My code is based on JakeRenn’s repository.

The results/model are not good enough (or terrible) currently. Continue reading if you are interested in simple/fast neural network experiments for pose estimation.

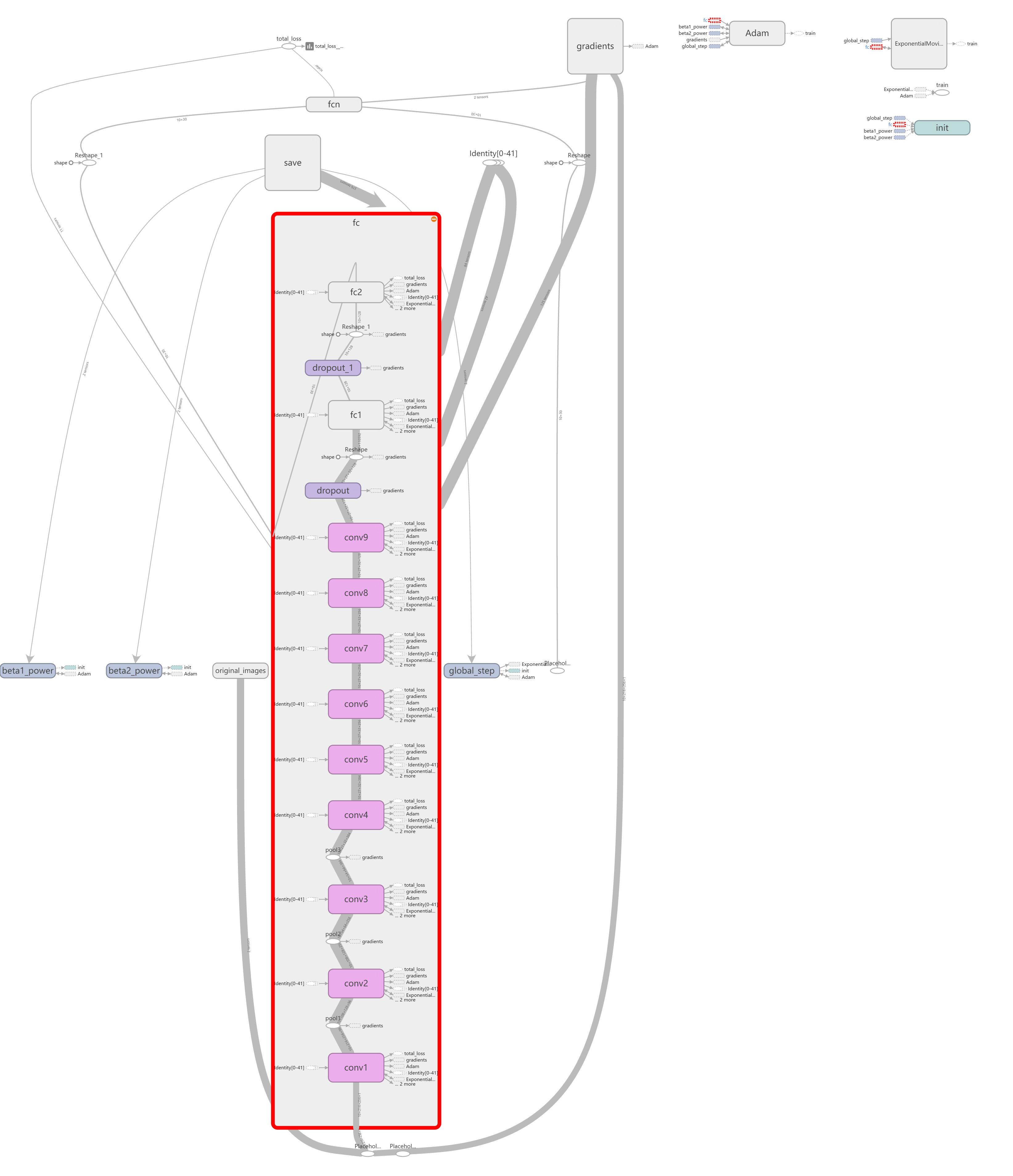

Original Convolutional Network

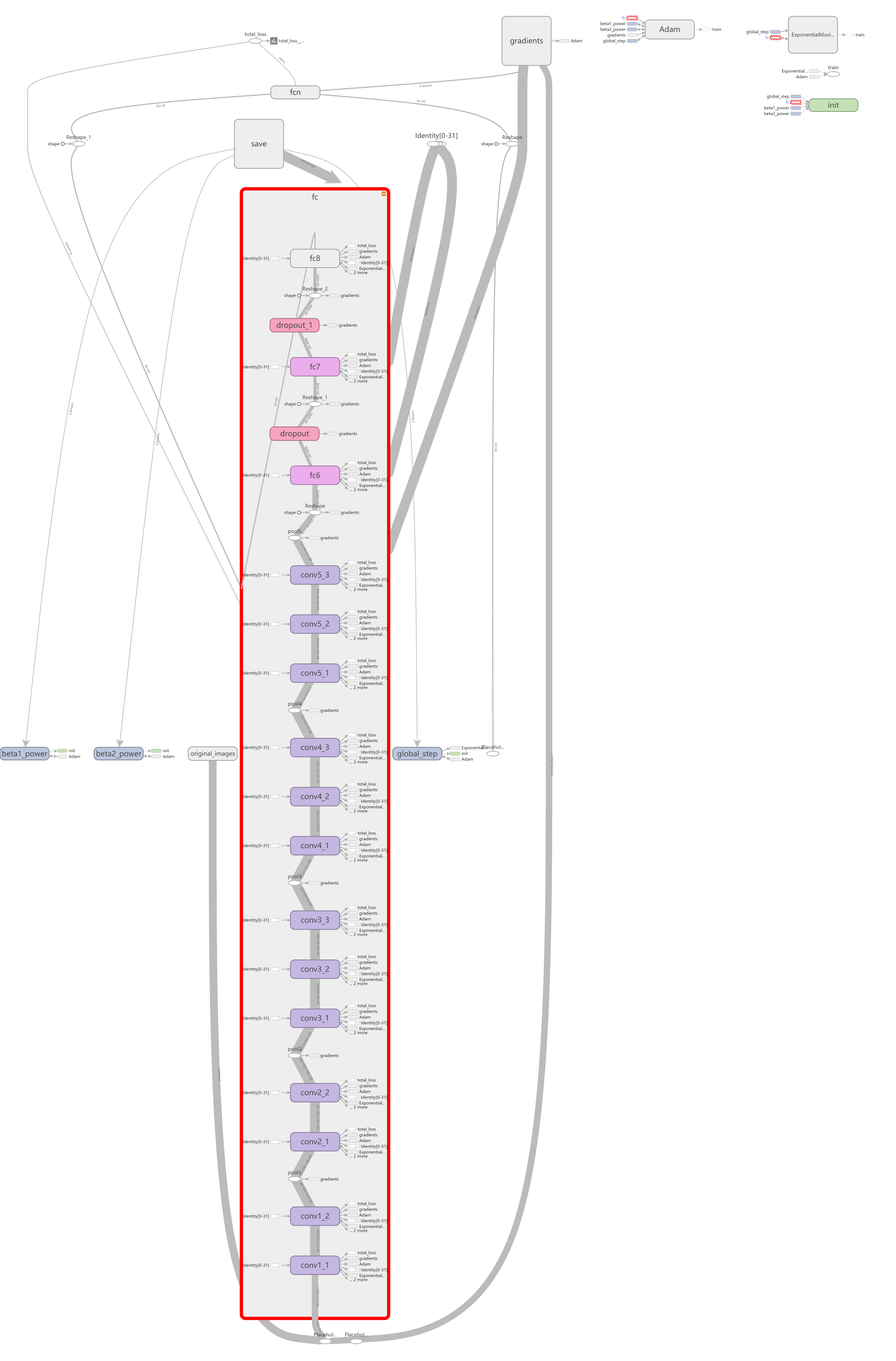

Well, at first, I try to train with this network defined in the original repository (open the image in a new window to see the original big image):

But the results are identical for all images which indicates some layers’ weights are zeros and points only controlled by bias values.

4 Convolutional Layers Network

So I reduce the convolutinal layer number to 4, which is better than 5 or 3. Let’s call it 4ConvNet

|

|

Now we can get different points for most test images.

(batch size:20, iter: 50000)

While the overall points shift and only some parts (e.g. right hand) are sensitive to different pose.

For the moment, train images perform much better.

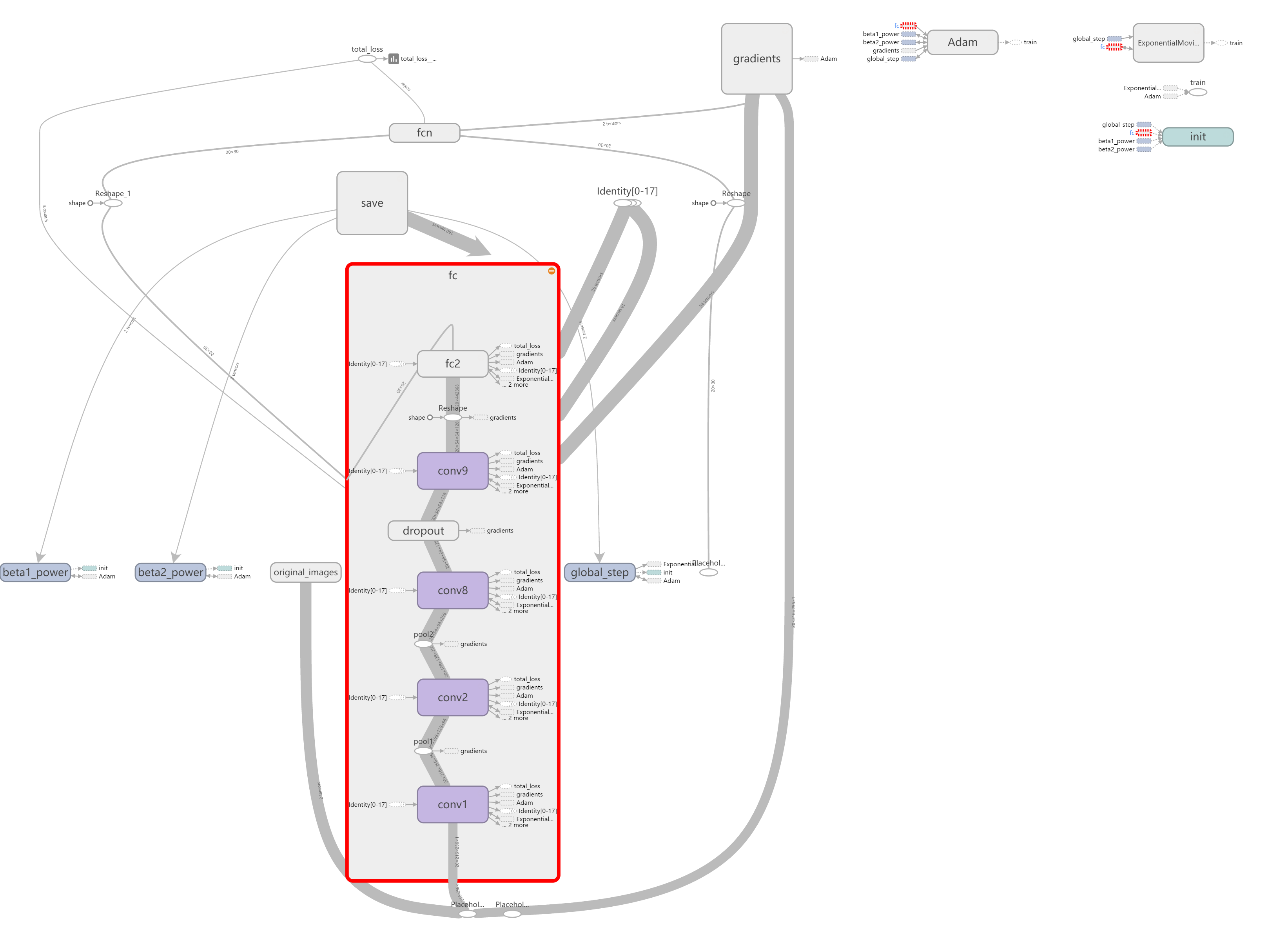

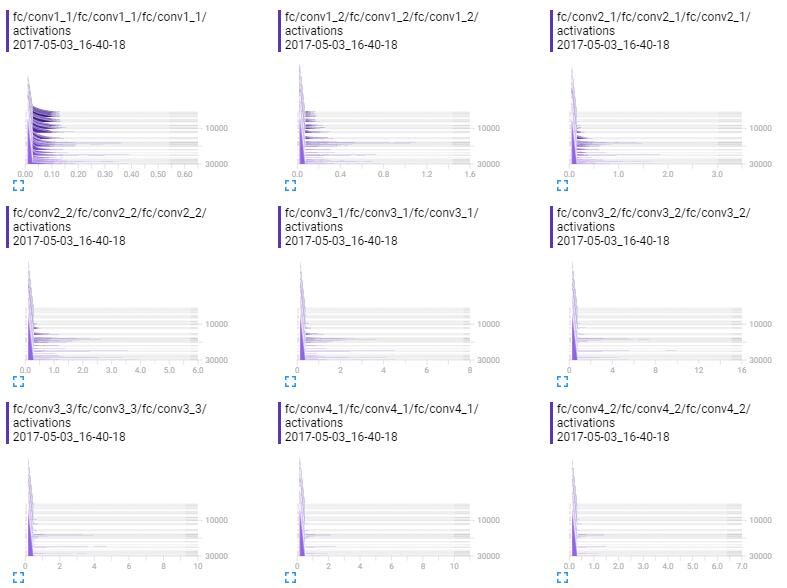

VGG16 Network

It seems impossible to get better results with simply reducing or increasing layers. I tried to train with VGG16 Net.

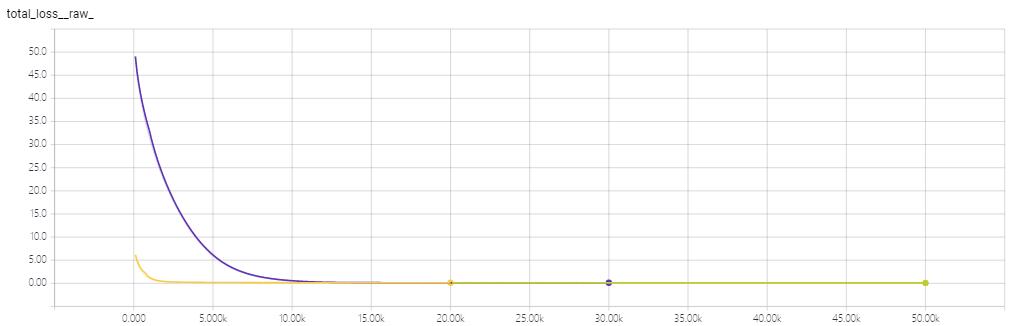

(batch size:20, iter: 30000)

It performs much better than the 4ConvNet models.

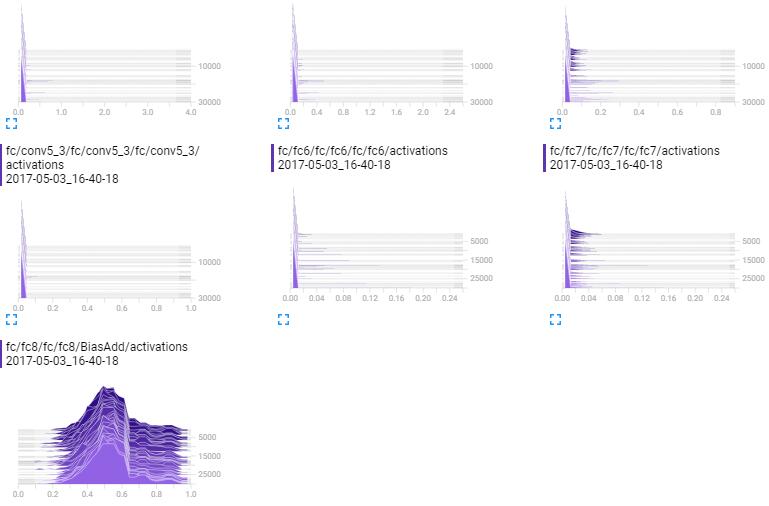

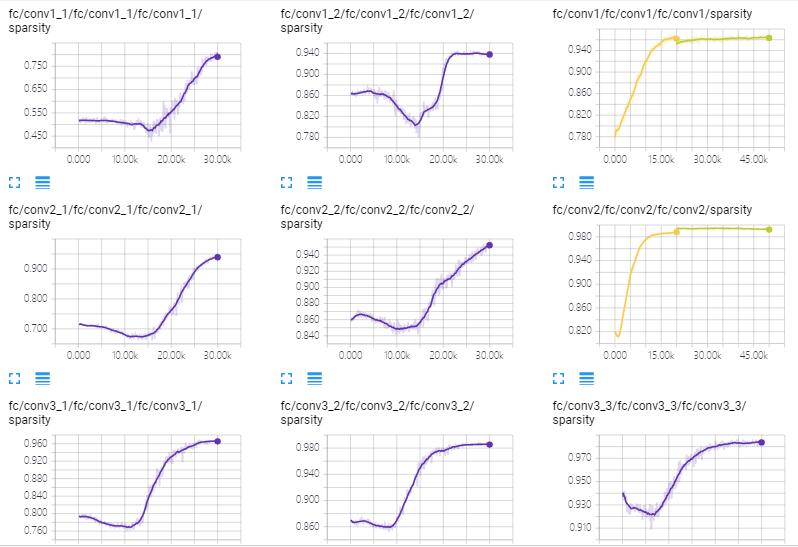

For more details, we can see each layer’s histograms with tensorboard. Weights distributed over zero in general but not absolutely.

Compare 4ConvNet and VGG16

Loss of VGG16 model much higher than 4ConvNet initially. 4ConvNet model converges quickly and result lower loss(0.09 < 0.13 at iter 30K). But 4ConvNet has higher sparsity (zero fraction) in general.

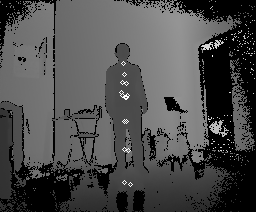

Data Preprocess

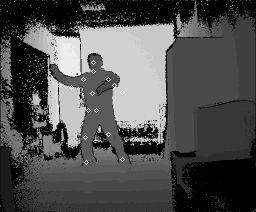

Each grayscale image is 256*212px, 13.3KB.

pose_estimation/data/input/test_imgs: ~4000 test imagespose_estimation/data/input/train_imgs: ~60000 train images, use half of them

Each of them are labeled with 15 articulate points.

Data Cleaning

Draw all train images with 15 articulate points, check them one-by-one to find out wrongly labeled images. Each set of 10K images has about 350 those dirty images. Clean them out of train images effectively improve the accuracy.

The following images are the results of applying model trainging with uncleaned data, in which some points fly away.

(batch size:20, iter: 30000)

Data Augmentation

Distort images by random rotation and flipping didn’t gain effective improvement in my experiments because the person stands vertically in most of my images. I think random horizontal shifting will because the points of test results shift both horizontally and vertically from the correct points in many cases.

Future Work

Paper reading/Reimplementation: Zhe Cao, Tomas Simon, Shih-En Wei and Yaser Sheikh. Realtime Multi-Person 2D Pose Estimation using Part Affinity Fields. In CVPR, 2017